What is Inter-process Communication (IPC)?

Inter-Process Communication (IPC) is a fundamental component of an operating system (OS). It provides the mechanisms that let processes running concurrently within the system communicate with one another efficiently.

The primary purpose of IPC is to support the exchange of information between these processes. For example, it lets a process be notified as soon as a specific event occurs, which makes real-time collaboration possible.

IPC goes beyond event notifications, facilitating the smooth transfer of data between processes. This functionality not only streamlines overall system operation but also enhances its coherence and responsiveness.

As we explore IPC, we will see how important it is for making the different parts of the operating system work together, which improves overall system performance and speed.

To understand Inter-Process Communication, we need to understand Process Synchronization, as it forms the foundation for IPC. Additionally, we’ll explore how processes are divided into two categories based on process synchronization in the operating system.

- Independent Processes: Here, the execution of one process has no impact on the execution of another process.

- Cooperative Processes: In this case, the execution of one process affects the execution of another process.

In addition to these concepts, we’ll go deeper into understanding Inter-Process Communication in the operating system through advanced-level examples of process synchronization. These examples truly mirror the design and development of a real operating system, illustrating the intricacies in a way that aligns with the content of this article.

Additionally, it’s worth noting that I’ll explain this topic at an advanced level, providing in-depth knowledge not commonly found in top universities worldwide.

Process Synchronization In Inter-Process Communication (IPC) :

Introduction: In operating systems, the synchronization of processes is important for efficient resource utilization. This article explores the distinction between independent and cooperative processes, the need for synchronization, and methodologies like Inter-Process Communication (IPC).

Independent vs. Cooperative Processes: Processes are categorized into independent and cooperative types. Independent processes operate autonomously, while cooperative processes impact one another, necessitating synchronization.

Independent Processes: Here, the execution of one process has no impact on the execution of another.

Cooperative Processes: In this case, the execution of one process affects the execution of another.

As we’ve learned, cooperative processes necessitate process synchronization. We achieve cooperation among two or more processes using methodologies like IPC (Inter-Process Communication) through ‘Shared Memory Techniques’ or ‘Message Passing’.

Example:

- Consider a process named “Writer” that is currently writing data to a specific area in memory.

- On the other side, there’s another process named “Reader” that is reading the same data from that memory area and sending it to the printer.

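- In such a scenario, the two processes must synchronize their actions: the “Reader” should read the memory area only after the “Writer” has finished writing to it, otherwise incomplete data could be sent to the printer.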
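The Writer/Reader example above can be sketched in a few lines. This is only an illustration under stated assumptions: two Python threads stand in for the two processes, a list stands in for the shared memory area, and the names `shared_buffer`, `data_ready` and `printed` are hypothetical.

```python
import threading

shared_buffer = []                  # stands in for the shared memory area
data_ready = threading.Event()      # set by the Writer once the data is complete
printed = []                        # stands in for what reaches the printer


def writer():
    # Write the whole record first, then announce that it is ready.
    for word in ["IPC", "needs", "synchronization"]:
        shared_buffer.append(word)
    data_ready.set()


def reader():
    # Block until the Writer signals completion, then "print" the data.
    data_ready.wait()
    printed.append(" ".join(shared_buffer))


# The Reader is started first to show that it really waits for the Writer.
reader_thread = threading.Thread(target=reader)
writer_thread = threading.Thread(target=writer)
reader_thread.start()
writer_thread.start()
reader_thread.join()
writer_thread.join()

print(printed[0])   # prints: IPC needs synchronization
```

Without the `data_ready` signal, the Reader could run while the buffer is still empty or only partly filled, which is exactly the situation synchronization is meant to rule out.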

Inter-process communication (IPC) serves as an important mechanism enabling processes to talk to each other and coordinate their activities. This communication is like a cooperative dance between processes, ensuring they work together smoothly. Processes achieve this collaboration through two main channels:

Shared Memory: Processes can share a common memory space, allowing them to exchange information directly. It’s like sharing a virtual whiteboard where they jot down notes for each other, ensuring everyone stays on the same page.

Message Passing: Another method involves processes sending messages to each other. It’s akin to passing notes in class, where one process leaves a message for another, allowing them to communicate without directly sharing the same memory space.

This dance of information exchange, whether through shared memory or message passing, enables processes to sync up and accomplish tasks together. It’s a coordinated effort, like a team working in tandem to achieve a common goal.

In addition, IPC ensures that processes collaborate efficiently, enhancing the overall performance of the operating system.
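The difference between the two channels can be seen in a small sketch. It assumes Python 3.8 or later and, to stay short, plays both the sending and the receiving role inside one process. In practice the two ends would live in different processes. The variable names are hypothetical.

```python
from multiprocessing import Pipe, shared_memory

# Shared memory: both sides attach to the same named block of memory.
block = shared_memory.SharedMemory(create=True, size=16)
block.buf[:5] = b"hello"                             # "writer" side stores bytes

view = shared_memory.SharedMemory(name=block.name)   # "reader" side attaches by name
from_shared = bytes(view.buf[:5])                    # reads the very same bytes

view.close()
block.close()
block.unlink()                                       # release the block

# Message passing: the data is copied through a channel instead.
sender, receiver = Pipe()
sender.send("hello")
from_message = receiver.recv()
sender.close()
receiver.close()

print(from_shared, from_message)   # prints: b'hello' hello
```

With shared memory nothing is copied between the two sides, but they must coordinate their access themselves. With message passing the channel does the copying and the ordering for them.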

Need for Process Synchronization (Process Synchronization and Its Importance) :

In today’s multiprogramming and time-sharing systems, multiple processes co-exist in memory at the same time, so synchronization becomes necessary. In such environments, processes communicate through IPC and share resources among themselves; synchronization keeps that communication and sharing smooth and efficient.

Shared Resources and Resource Manager: Consider processes P1, P2, and P3 requiring resources R1, R2, and R3. In a multi-programming setting, the operating system’s resource manager oversees the sharing of hardware, software, or variable resources among these processes.

Note: Resources can be hardware or software entities, and the operating system manages their sharing among processes. Private resources are limited in a multi-programming environment, where resources need to be shared among different processes.

Example of Process Synchronization :

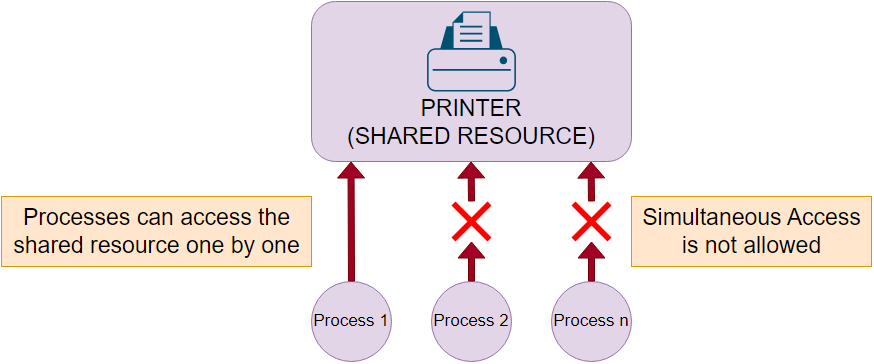

Consider a printer as an example of a shared resource. Different processes can use the printer at different times, but they cannot use it simultaneously. If two processes sent output to it at the same time, their pages could end up mixed together. Process synchronization therefore requires that only one process access such a resource at a time; otherwise, the system could encounter disruptions.
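A minimal sketch of the printer rule, assuming threads as stand-ins for the processes P1, P2 and P3 and a plain list as a stand-in for the printer. The names `printer_lock`, `print_job` and `printed_pages` are hypothetical.

```python
import threading

printer_lock = threading.Lock()     # guards the single shared printer
printed_pages = []                  # what the "printer" produced, in order


def print_job(process_name, pages):
    # Hold the lock for the whole job so pages of two jobs never interleave.
    with printer_lock:
        for page in range(1, pages + 1):
            printed_pages.append((process_name, page))


jobs = [threading.Thread(target=print_job, args=(name, 3)) for name in ("P1", "P2", "P3")]
for job in jobs:
    job.start()
for job in jobs:
    job.join()

# Each job's pages come out as one contiguous run of three.
for start in range(0, len(printed_pages), 3):
    print(printed_pages[start:start + 3])
```

The order in which the three jobs reach the printer may differ from run to run, but each job always prints its pages 1 to 3 without interruption.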

✪ Practical Problems ✪

Problem #1

Now, let’s explore what happens when different processes attempt to access the same resource simultaneously:

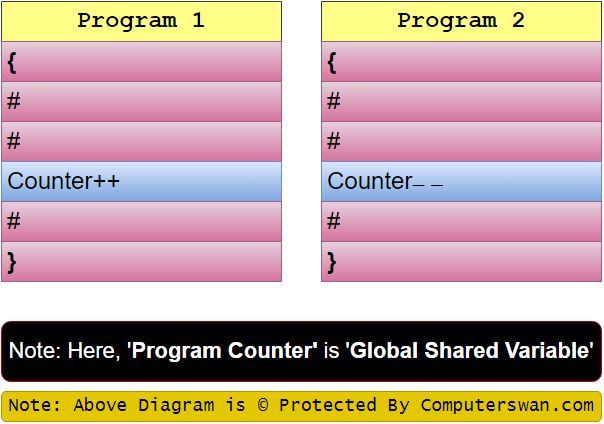

Assume we have a ‘Global Shared Variable’, defined here as a counter:

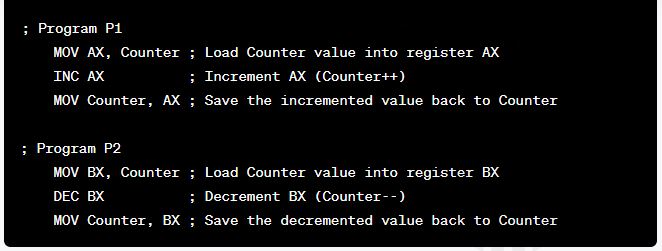

int Counter = 5;

Consider two programs, P1 and P2 (see the diagram below):

- In Program 1, we observe that the counter is being incremented by 1.

- Simultaneously, in Program 2, the counter is being decremented by 1.

In this scenario, both programs are attempting to manipulate the shared variable ‘Counter‘ at the same time. The potential outcome of this concurrent access depends on the specific mechanisms in place for handling such situations. It highlights the need for careful consideration and implementation of synchronization techniques to avoid conflicts and ensure the consistency of shared resources.

Scenario 1:

If we execute these programs on a single-core processor, what will happen?

- Program P1 executes first. After some time, a context switch occurs and Program P2 executes. This repeats, so each program runs in turn.

Now, let’s focus on our ‘Global Shared Variable’, which we’ve named ‘Counter’, and observe its value based on the operations of the programs above.

int Counter = 5;

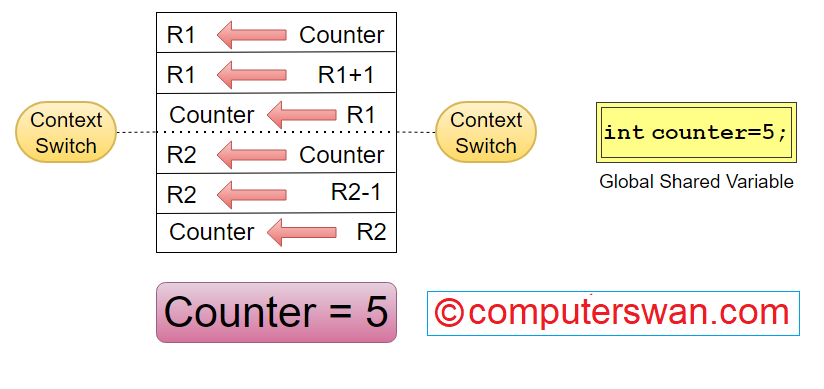

Case 1: In this case, Program P1 executes first:

When Program P1 reads the counter value, it will be 5.

As the program progresses, the counter value will increment, becoming 6, and this value will be saved in the counter.

When Program P2 reads the counter value, it will be 6.

As the program progresses, the counter value will decrement, becoming 5 again, and this value will be saved in the counter.

Final Task Sync Result = 5

Case 2: In this case, Program P2 executes first:

Program P2 reads the counter value, which is 5.

As the program progresses, the counter value decrements to 4, and this value is saved in the counter.

Program P1 reads the counter value, which is 4.

As the program progresses, the counter value increments to 5 again, and this value is saved in the counter.

Final Task Sync Result = 5

Assembly Instructions Case 1:

In Case 1, Program P1 executes first on a single-core processor. The instructions described below assume a simple, generic assembly language and illustrate the basic operations that Programs P1 and P2 perform on the shared variable ‘Counter’. The specific instructions vary with the assembly language and the architecture of the processor being used.

In this scenario, for Program P1, the default counter value of 5 is loaded into register R1.

In the next step, the value in the register is incremented by 1, making it now 6.

This incremented value is then saved back to the Counter.

During a context switch, Program P2 is executed:

For Program P2, register R2 is loaded with the value 6 from the Counter.

In the next step, the value in the register is decremented by 1, making it now 5.

This decremented value is then saved back to the Counter.

The final synchronized result is 5.
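The steps of Case 1 can be traced line by line. In this sketch the Python variables `r1` and `r2` stand in for the registers R1 and R2, and the LOAD, INC, DEC and STORE mnemonics in the comments are assumptions made for illustration, not a real instruction set.

```python
counter = 5          # the shared variable, as in `int Counter = 5;`

# Program P1 runs to completion first.
r1 = counter         # LOAD  R1, Counter   -> R1 = 5
r1 = r1 + 1          # INC   R1            -> R1 = 6
counter = r1         # STORE Counter, R1   -> Counter = 6

# Context switch: Program P2 now runs to completion.
r2 = counter         # LOAD  R2, Counter   -> R2 = 6
r2 = r2 - 1          # DEC   R2            -> R2 = 5
counter = r2         # STORE Counter, R2   -> Counter = 5

print(counter)       # prints 5
```

Because P1 stores its result before P2 loads the Counter, P2 works on the up-to-date value and no update is lost.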

Assembly Instructions Case 2:

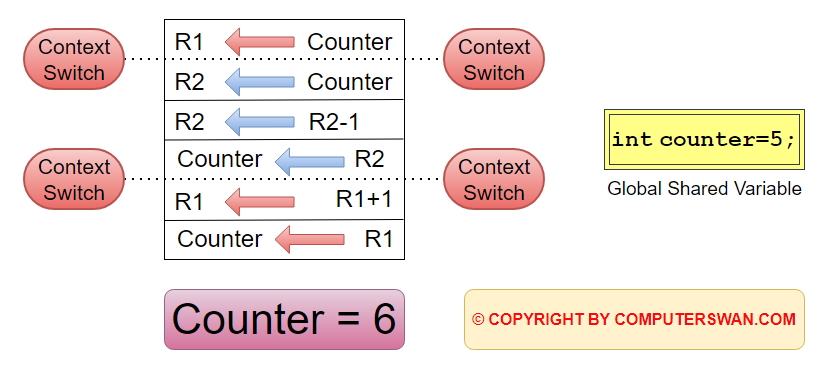

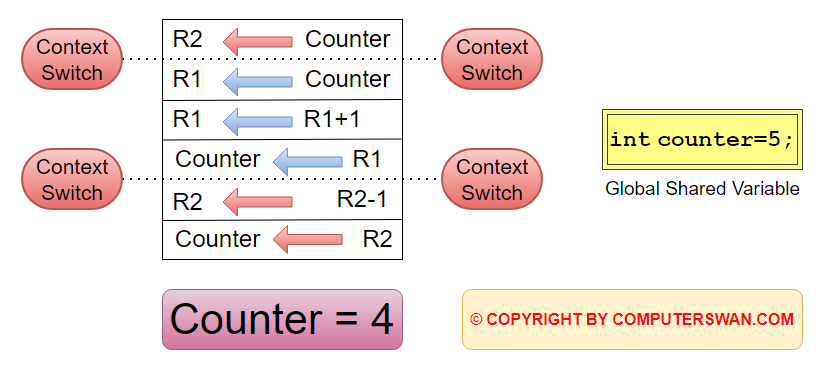

In this case the final result can be 4 or 6 instead of the expected 5, which indicates a synchronization problem in the system. Let’s analyze how the assembly instructions are executed.

Here, for Program P1, the value of the Counter (default value 5) is loaded into register R1.

Then a context switch happens (see the diagram above).

Now the value 5 is loaded from the Counter into register R2 of Program P2.

The value in R2 is decremented by 1 and becomes 4.

The value 4 is saved from register R2 back to the Counter.

In the next step the context is switched back, so Program P1 resumes from the point where it was paused.

The value in register R1 just before the context switch was 5, and it is restored unchanged.

That value in R1 is now incremented by 1 and becomes 6.

The value 6 is saved from register R1 to the Counter, overwriting the 4 written by P2.

Final Task Sync Result = 6
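The same walkthrough, written as a trace. As before, `r1` and `r2` are stand-ins for the registers R1 and R2, and the comments mark where the context switches are assumed to fall.

```python
counter = 5          # the shared variable

r1 = counter         # P1: load Counter into R1          -> R1 = 5
# context switch: P1 is paused, its register R1 (5) is saved

r2 = counter         # P2: load Counter into R2          -> R2 = 5
r2 = r2 - 1          # P2: decrement                     -> R2 = 4
counter = r2         # P2: store                         -> Counter = 4
# context switch back: P1 resumes with R1 restored to 5

r1 = r1 + 1          # P1: increment the stale value     -> R1 = 6
counter = r1         # P1: store, overwriting P2's 4     -> Counter = 6

print(counter)       # prints 6
```

P1 never sees the value 4 that P2 wrote, so P2's decrement is lost.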

Tip: In an operating system, a process can undergo a context switch between any two instructions, but a switch cannot occur in the middle of a single instruction cycle (fetch, decode, execute, write back).

Here, the critical section is not properly protected, which leads to a race condition. When Program P1 resumes after the context switches, it does not reload the Counter; it continues with the stale value 5 still held in register R1, increments it, and saves it back. The decrement performed by P2 is lost, and the final value is 6 instead of 5.

To resolve this issue, proper synchronization mechanisms, such as locks or semaphores, should be implemented to ensure that only one program can access the critical section at a time. This way, the system will avoid conflicts, and the final synchronized result will be consistent.
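One way to apply the lock-based fix is sketched below. It assumes threads in place of the programs P1 and P2 and uses `threading.Lock` as the lock. The names `shared`, `counter_lock`, `p1` and `p2` are hypothetical.

```python
import threading

shared = {"counter": 5}             # the shared variable
counter_lock = threading.Lock()     # protects the critical section


def p1(times):
    for _ in range(times):
        with counter_lock:          # only one thread may be in here at a time
            shared["counter"] += 1  # load, increment and store as one unit


def p2(times):
    for _ in range(times):
        with counter_lock:
            shared["counter"] -= 1


t1 = threading.Thread(target=p1, args=(50_000,))
t2 = threading.Thread(target=p2, args=(50_000,))
t1.start()
t2.start()
t1.join()
t2.join()

print(shared["counter"])   # prints 5
```

Because the load, the arithmetic and the store all happen while the lock is held, a switch to the other thread can no longer slip in between them, and equal numbers of increments and decrements always bring the counter back to 5.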

Assembly Instructions Case 3:

In this scenario, Program P2 is the first to execute, so the value 5 is loaded from the Counter into register R2.

Then a context switch happens.

The value 5 is loaded from the Counter into register R1 of Program P1.

The value in R1 is incremented by 1, resulting in 6.

The value 6 is saved from register R1 back to the Counter.

Then another context switch happens.

Program P2 resumes with the value it loaded before the first context switch (5) still in register R2.

That value is decremented by 1 to 4 and saved from register R2 to the Counter, overwriting the 6 written by P1.

Final Task Sync Result = 4
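Cases 1 to 3 are only three of the ways the six instructions can be interleaved. The sketch below enumerates all of them. It assumes each program consists of exactly three steps (load, increment or decrement, store) and that a context switch may fall between any two steps. The schedule strings and function names are hypothetical.

```python
from itertools import combinations

P1 = [("load", "R1"), ("inc", "R1"), ("store", "R1")]
P2 = [("load", "R2"), ("dec", "R2"), ("store", "R2")]


def run(schedule, start=5):
    """Execute one interleaving; each character says which program runs next."""
    counter = start
    regs = {}
    next_step = {"1": 0, "2": 0}
    programs = {"1": P1, "2": P2}
    for who in schedule:
        op, reg = programs[who][next_step[who]]
        next_step[who] += 1
        if op == "load":
            regs[reg] = counter
        elif op == "inc":
            regs[reg] += 1
        elif op == "dec":
            regs[reg] -= 1
        else:                       # store
            counter = regs[reg]
    return counter


def all_schedules():
    # Choose which 3 of the 6 time slots belong to P1; P2 gets the rest.
    for slots in combinations(range(6), 3):
        yield "".join("1" if i in slots else "2" for i in range(6))


results = {}
for schedule in all_schedules():
    results.setdefault(run(schedule), []).append(schedule)

for final in sorted(results):
    print(final, len(results[final]))
# prints:
# 4 9
# 5 2
# 6 9
```

Only the two schedules in which one program finishes before the other starts give 5. The schedule `"122211"` corresponds to the interleaving that ends in 6, and `"211122"` to the one that ends in 4.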

FAQs Related To Inter-process Communication (IPC):

1. What is Inter-process Communication (IPC)?

- Inter-process Communication (IPC) refers to the methods and mechanisms through which different processes in an operating system communicate with each other.

2. Why is IPC important in operating systems?

- IPC is crucial in operating systems to facilitate communication and data exchange between processes, enabling them to work together efficiently and share information.

3. What are the common methods of IPC?

- Common methods of IPC include message passing, shared memory, and synchronization techniques that allow processes to exchange data and coordinate their actions.

4. How does IPC enhance collaboration between processes?

- IPC enhances collaboration by providing processes with the means to share data, synchronize their activities, and work together towards common goals, improving overall system efficiency.

5. Can you explain the message-passing method in IPC?

- Message passing involves processes exchanging information through messages, enabling communication even if the processes are running on different parts of the system.

6. What is shared memory in the context of IPC?

- Shared memory allows processes to access the same region of memory, enabling them to share data directly and providing a fast and efficient means of communication.

7. How does IPC contribute to resource sharing among processes?

- IPC ensures efficient resource sharing among processes by allowing them to communicate and coordinate their use of resources such as memory, files, and hardware.

8. What challenges does IPC help address in a multi-process environment?

- In a multi-process environment, IPC addresses challenges related to synchronization, data consistency, and coordination, ensuring seamless collaboration among concurrent processes.

9. How does IPC support communication in distributed systems?

- IPC plays a crucial role in distributed systems by enabling communication between processes running on different machines, fostering collaboration in networked environments.

10. What role does IPC play in the overall performance of an operating system?

- IPC significantly influences the performance of an operating system by facilitating efficient communication and resource sharing, contributing to overall system responsiveness and effectiveness.

FAQs Related To Process Synchronization in Operating Systems:

1. What is Process Synchronization in operating systems?

- Process Synchronization is a mechanism that ensures the orderly execution of processes by coordinating their access to shared resources, preventing conflicts, and maintaining data consistency.

2. Why is Process Synchronization important in multi-process environments?

- In multi-process environments, synchronization is crucial to avoid race conditions and data corruption and to ensure seamless collaboration, contributing to the overall stability of the system.

3. What are the challenges addressed by Process Synchronization?

- Process Synchronization addresses challenges such as deadlock prevention, avoiding resource contention, and maintaining the integrity of shared data in concurrent processing scenarios.

4. How does Process Synchronization contribute to resource sharing?

- Process Synchronization facilitates efficient resource sharing among processes by regulating their access to shared resources, preventing conflicts, and promoting fair utilization.

5. What is the significance of Independent Processes in an operating system?

- Independent processes operate without requiring synchronization and are not affected by the execution of other processes, contributing to parallelism and efficient resource utilization.

6. In what scenarios are Cooperative Processes beneficial?

- Cooperative Processes collaborate and communicate with each other, sharing data and resources, which is advantageous in situations where coordinated activities and information exchange are essential.

7. How do Independent Processes enhance system performance?

- Independent Processes enhance system performance by running concurrently without the need for synchronization, maximizing resource utilization and throughput.

8. What are the drawbacks of excessive reliance on Independent Processes?

- Excessive reliance on Independent Processes can lead to challenges such as data inconsistency, lack of coordination, and potential issues in scenarios where collaboration is necessary.

9. How does Cooperative Process execution impact overall system efficiency?

- Cooperative Process execution promotes efficient collaboration, enabling shared resource utilization and synchronized actions, positively influencing the overall efficiency of the system.

10. Can a combination of Independent and Cooperative Processes be beneficial?

- Yes, a combination of both Independent and Cooperative Processes allows for a flexible and optimized approach, balancing parallelism and collaboration based on the specific requirements of the system.

FAQs Related To Need for Process Synchronization:

1. Why is Process Synchronization essential in operating systems?

- Process Synchronization is vital in operating systems to manage concurrent processes, prevent conflicts, and ensure the orderly execution of tasks.

2. What challenges does Process Synchronization address in multi-programming environments?

- Process Synchronization addresses challenges like resource contention, deadlock prevention, and data consistency in environments with multiple concurrent processes.

3. How does Process Synchronization contribute to seamless communication?

- Process Synchronization facilitates seamless communication between processes through Inter-Process Communication (IPC), allowing efficient sharing of resources and information.

4. What role does Process Synchronization play in preventing race conditions?

- Process Synchronization prevents race conditions by coordinating access to shared resources, ensuring that processes do not interfere with each other’s critical sections.

5. Why is the prevention of data corruption a priority in Process Synchronization?

- Process Synchronization prioritizes preventing data corruption by regulating access to shared data, avoiding conflicts, and maintaining the integrity of information.

6. How does Process Synchronization impact resource-sharing efficiency?

- Process Synchronization enhances resource-sharing efficiency by controlling the access of processes to shared resources, preventing contention, and optimizing utilization.

7. What happens in a system with inadequate Process Synchronization mechanisms?

- In systems lacking effective Process Synchronization, there can be issues like data inconsistency, deadlocks, and inefficient resource usage, leading to system instability.

8. Can Process Synchronization be beneficial in time-sharing environments?

- Yes, Process Synchronization is crucial in time-sharing environments to manage multiple processes efficiently, ensuring fair access to resources and maintaining system stability.

9. How does Process Synchronization contribute to overall system reliability?

- Process Synchronization contributes to overall system reliability by preventing conflicts, ensuring data integrity, and promoting a synchronized execution of processes.

10. What are the benefits of implementing robust Process Synchronization?

- Implementing robust Process Synchronization improves system performance, resource utilization, and stability, which in turn improves the user experience.

People Also Ask : FAQs on Context Switch in Operating Systems and Its Need:

1. What is a context switch in operating systems, and why does it occur?

- A context switch in operating systems refers to the process of saving and restoring the state of a CPU for different tasks. It occurs to enable multitasking and switch between processes efficiently.

2. How does a context switch contribute to multitasking in operating systems?

- A context switch allows operating systems to switch between different processes, enabling multitasking by saving the current state of a process and loading the saved state of another.

3. Why is a context switch essential for the concurrent execution of processes?

- A context switch is crucial for concurrent execution as it allows the operating system to allocate CPU time to multiple processes, ensuring efficient utilization and responsiveness.

4. What triggers a context switch in an operating system?

- Context switches are triggered by events such as interrupts, system calls, or scheduling decisions, prompting the OS to save the current context and switch to another process.

5. How does a context switch impact system performance?

- While context switches are necessary for multitasking, frequent context switching can impact system performance by introducing overhead. Optimizing context switch mechanisms is crucial for maintaining efficiency.
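The save-and-restore idea behind a context switch can be sketched with a toy model. Everything here is a simplifying assumption: the `PCB` and `CPU` classes, the single `acc` register, the two-instruction time slice, and "programs" that are just lists of numbers to add up. A real operating system saves far more state.

```python
class PCB:
    """Saved context of one process (hypothetical and heavily simplified)."""

    def __init__(self, name):
        self.name = name
        self.pc = 0            # index of the next instruction to run
        self.registers = {}    # saved register values


class CPU:
    def __init__(self):
        self.pc = 0
        self.registers = {}

    def save_context(self, pcb):
        pcb.pc = self.pc
        pcb.registers = dict(self.registers)

    def restore_context(self, pcb):
        self.pc = pcb.pc
        self.registers = dict(pcb.registers)


def run_slice(cpu, program, steps):
    # Each "instruction" adds one number to the accumulator register.
    while steps and cpu.pc < len(program):
        cpu.registers["acc"] = cpu.registers.get("acc", 0) + program[cpu.pc]
        cpu.pc += 1
        steps -= 1


programs = {"A": [1, 2, 3, 4], "B": [10, 20, 30]}
ready = [PCB("A"), PCB("B")]
cpu = CPU()
finished = {}

while ready:
    pcb = ready.pop(0)
    cpu.restore_context(pcb)                      # load the next process's state
    run_slice(cpu, programs[pcb.name], steps=2)   # run for one time slice
    cpu.save_context(pcb)                         # save state before switching
    if pcb.pc < len(programs[pcb.name]):
        ready.append(pcb)                         # not done: back of the queue
    else:
        finished[pcb.name] = pcb.registers["acc"]

print(finished)   # prints {'A': 10, 'B': 60}
```

Each process gets the correct total even though the two take turns on the same CPU, because its program counter and register are saved when it is switched out and restored when it is switched back in.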

People Also Ask : FAQs on Registers in CPU :

1. What are registers in a CPU, and what role do they play in processing data?

- Registers in a CPU are small, fast storage locations used to store data temporarily during program execution. They play a critical role in speeding up data access and manipulation.

2. How do registers contribute to the overall performance of the CPU?

- Registers contribute to CPU performance by providing fast, direct access to data and instructions. They enable quick retrieval and storage, minimizing the time required for processing.

3. What types of registers are commonly found in a CPU, and what are their functions?

- Common types of registers include data registers, address registers, and control registers. They serve functions like storing operands, addresses, and control information, respectively.

4. Why is the size and number of registers important in CPU design?

- The size and number of registers impact CPU performance and efficiency. More and larger registers allow for a larger amount of data to be processed simultaneously, enhancing overall speed.

5. How do registers support the execution of machine instructions in a CPU?

- Registers store operands and intermediate results during the execution of machine instructions, facilitating quick access and manipulation of data, which is crucial for efficient processing.

People Also Ask : FAQs on Assembly Instructions:

1. What are assembly instructions in computer programming?

- Assembly instructions are low-level, human-readable commands that correspond directly to machine instructions. They are used in assembly language programming to write programs for a specific CPU architecture.

2. How do assembly instructions differ from high-level programming languages?

- Assembly instructions are closer to machine code and specific to a CPU architecture, offering more direct control over hardware. High-level languages provide abstraction and are more portable across architectures.

3. Why might programmers use assembly instructions in their code?

- Programmers use assembly instructions when they require fine-grained control over hardware resources, need to optimize code for performance, or work in embedded systems with specific requirements.

4. How does the assembly language relate to machine code, and why is it considered a low-level language?

- Assembly language is a low-level language as it closely corresponds to machine code. Each assembly instruction typically represents a specific machine-level operation, making it closer to the hardware.

5. Can assembly instructions enhance program efficiency, and in what scenarios are they beneficial?

- Yes, assembly instructions can enhance program efficiency by allowing programmers to optimize code for a specific architecture. They are beneficial in scenarios where performance is critical and fine-tuning is required.

People Also Ask : FAQs on IPC Techniques in Linux:

1. What is IPC, and why is it essential in Linux systems?

- Inter-Process Communication (IPC) in Linux involves communication between processes, enabling data exchange. It’s crucial for collaboration and coordination between different processes in a Linux environment.

2. What are the common IPC techniques used in Linux?

- Linux employs several IPC techniques, including shared memory, message queues, semaphores, and pipes. Each technique serves specific communication needs between processes.

3. How does Shared Memory work as an IPC mechanism in Linux?

- Shared Memory in Linux allows multiple processes to access a common memory segment, facilitating fast data exchange. It is one of the most efficient IPC mechanisms for high-speed communication.

4. What is the role of Message Queues in Linux IPC?

- Message Queues provide a way for processes to communicate by sending and receiving messages. Linux IPC Message Queues offer a reliable and orderly means of data exchange.

5. How do Semaphores contribute to synchronization in Linux IPC?

- Semaphores in Linux IPC ensure synchronization between processes by controlling access to shared resources. They help in preventing conflicts and maintaining order in concurrent execution.

6. What are Pipes, and how are they used for IPC in Linux?

- Pipes create a unidirectional communication channel between processes. In Linux, pipes facilitate IPC by allowing data flow from one process to another, enhancing collaboration.

7. How can File-Based IPC mechanisms enhance communication in Linux?

- File-based IPC mechanisms, such as named pipes (FIFOs), use an entry in the file system as the meeting point between processes. System V IPC objects, by contrast, are identified by keys rather than by file names. Understanding these mechanisms is essential for efficient Linux IPC.

8. Which IPC technique is suitable for different communication scenarios in Linux?

- Choosing the right IPC technique depends on the communication requirements. Shared Memory is ideal for high-speed data exchange, while Message Queues are suitable for orderly message-based communication.

9. Can processes on different Linux systems communicate using IPC?

- Yes, processes on different Linux systems can communicate using IPC techniques like sockets. Networking mechanisms extend IPC capabilities beyond a single system.

10. How can programmers implement IPC in Linux applications efficiently?

- Programmers can efficiently implement IPC in Linux applications by selecting the appropriate technique based on communication needs, understanding synchronization, and leveraging Linux system calls for effective implementation.
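Of the techniques listed in this FAQ, the pipe is probably the easiest to sketch. The example below uses Python's `os.pipe`, which wraps the underlying system call. To stay short it writes and reads within a single process, whereas in real use the two ends would belong to different processes, and the message text is made up.

```python
import os

read_fd, write_fd = os.pipe()          # kernel-managed, one-way byte channel

# "Producer" side: write into the pipe, then close the write end.
os.write(write_fd, b"data from producer\n")
os.close(write_fd)                     # closing signals end-of-file to the reader

# "Consumer" side: read until end-of-file.
chunks = []
while True:
    chunk = os.read(read_fd, 1024)
    if not chunk:
        break
    chunks.append(chunk)
os.close(read_fd)

received = b"".join(chunks).decode()
print(received, end="")                # prints: data from producer
```

Data flows in one direction only, from the write end to the read end, which is what makes a pipe a unidirectional channel.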

People Also Ask : Understanding Inter-Process Communication (IPC) in Operating Systems with Examples:

1. What is Inter-Process Communication (IPC) in Operating Systems?

- Inter-Process Communication (IPC) refers to the mechanisms that enable communication and data exchange between different processes running concurrently in an operating system.

2. Why is IPC crucial in operating systems?

- IPC is essential for processes to collaborate, share data, and synchronize their activities, facilitating efficient and coordinated execution in operating systems.

3. What are the common IPC mechanisms in operating systems?

- Operating systems employ various IPC mechanisms, including shared memory, message passing, pipes, and sockets, to enable communication and interaction between processes.

4. How does Shared Memory work as an IPC mechanism?

- Shared Memory allows multiple processes to access the same region of memory, enabling them to exchange data directly. It’s a fast and efficient IPC method commonly used for high-performance applications.

5. Can you provide an example of IPC using Message Passing?

- Certainly! In IPC Message Passing, a sender process can send a message to a receiver process. For example, a producer process can send a message to a consumer process indicating the availability of new data.

6. What role do Pipes play in IPC, and can you provide a simple example?

- Pipes establish communication channels between processes. In a shell command, the output of one process can be piped as input to another process. For instance, “ls | grep keyword” uses a pipe to filter the output.

7. How do Sockets contribute to IPC, and can they be used for inter-machine communication?

- Sockets facilitate communication between processes, even on different machines. They are widely used for networking applications, enabling IPC over a network, making them a versatile IPC mechanism.

8. What challenges can arise with IPC in operating systems?

- Challenges in IPC include synchronization issues, data consistency problems, and the potential for deadlock situations. Addressing these challenges is crucial for ensuring robust IPC implementations.

9. How does IPC enhance multitasking in operating systems?

- IPC enables processes to share information and work together, enhancing multitasking capabilities in operating systems. It allows efficient resource utilization and coordination among concurrently executing processes.

10. Can you provide tips for the effective implementation of IPC in operating system applications?

- Effective implementation of IPC involves choosing the right mechanism based on communication requirements, handling synchronization carefully, and employing appropriate system calls for reliable and secure communication in operating systems.
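The `ls | grep keyword` pipeline from question 6 can be reproduced between two real child processes. To avoid depending on `ls` and `grep` being installed, this sketch assumes two small Python one-liners in their place. The file names it prints are invented.

```python
import subprocess
import sys

# Child 1 plays the role of `ls`: it prints a few names, one per line.
producer_code = "print('notes.txt'); print('keyword_list.txt'); print('photo.png')"

# Child 2 plays the role of `grep keyword`: it echoes only matching lines.
filter_code = (
    "import sys\n"
    "for line in sys.stdin:\n"
    "    if 'keyword' in line:\n"
    "        sys.stdout.write(line)\n"
)

producer = subprocess.Popen(
    [sys.executable, "-c", producer_code],
    stdout=subprocess.PIPE,
)
consumer = subprocess.Popen(
    [sys.executable, "-c", filter_code],
    stdin=producer.stdout,      # the pipe: producer's output feeds the consumer
    stdout=subprocess.PIPE,
    text=True,
)
producer.stdout.close()         # let the consumer see end-of-file when the producer exits
output, _ = consumer.communicate()
producer.wait()

print(output, end="")           # prints: keyword_list.txt
```

The two children never share memory. Everything the second one learns arrives through the pipe that connects them.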

Examples of Inter-Process Communication (IPC) in Operating Systems:

1. How can Shared Memory be utilized for IPC in a practical scenario?

- Example: Consider a scenario where two processes, a producer and a consumer, share a region of memory. The producer writes data to this shared memory, and the consumer reads from it, allowing them to exchange information efficiently.

2. Provide a real-world example illustrating the use of Message Passing in IPC.

- Example: In a messaging application, one process (sender) can use message passing to send a text message to another process (receiver). This facilitates communication between different components of the messaging system.

3. How does IPC using Pipes work with a practical application?

- Example: Imagine a shell script that uses a pipe to filter and process data. For instance, “cat log.txt | grep error” uses a pipe to send the content of a log file to a grep process, which keeps only the lines containing the word “error.”

4. Can you give an example of Sockets in IPC for inter-machine communication?

- Example: In a client-server architecture, a server process communicates with multiple client processes using sockets. The server listens on a specific IP address and port, while clients connect to the server to exchange data.

5. Illustrate a scenario where IPC challenges like synchronization are addressed effectively.

- Example: Consider a banking application where multiple processes handle transactions simultaneously. By using synchronization mechanisms like locks or semaphores, the processes ensure that concurrent transactions do not lead to data inconsistencies.

6. How can IPC contribute to multitasking in an operating system with an example?

- Example: In a multitasking environment, a word processing application may use IPC to communicate with a spell-checking process. This allows the word processor to continue running smoothly while the spell-checker operates independently, enhancing overall system multitasking capabilities.

7. Provide a tip for implementing secure IPC in an operating system application.

- Tip: Use encryption and authentication mechanisms when implementing IPC, especially in scenarios involving sensitive data exchange. This ensures secure communication between processes and protects against unauthorized access.

8. Can you share an example where IPC is essential for efficient resource utilization?

- Example: In a graphics processing application, rendering and user interface processes may use IPC to share information about the current state. This allows them to work together efficiently, enhancing resource utilization for a seamless user experience.

9. How can deadlock situations be prevented when processes use IPC?

- Explanation: IPC mechanisms do not prevent deadlock by themselves; prevention comes from how processes are designed to use them. Typical strategies include avoiding circular waits by making every process acquire resources in the same predefined order, and using timeouts so a process does not wait forever. These measures help minimize the risk of deadlock in complex systems.
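
The "predefined order" strategy can be sketched in Python. In this illustration, threads stand in for processes and account ids define the global lock order; both choices, along with the names `Account`, `transfer`, and `run_transfers`, are assumptions made for the example.

```python
import threading

class Account:
    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src: Account, dst: Account, amount: int) -> None:
    """Move money while always locking the lower account id first."""
    if src is dst:
        return  # nothing to move, and locking twice would block forever
    # A fixed global order means no two transfers can wait on each other
    # in a cycle, which removes the circular-wait condition.
    first, second = sorted((src, dst), key=lambda acc: acc.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

def run_transfers(rounds: int = 100):
    """Run opposing transfers concurrently and return both balances."""
    a, b = Account(1, 1000), Account(2, 1000)

    def a_to_b() -> None:
        for _ in range(rounds):
            transfer(a, b, 1)

    def b_to_a() -> None:
        for _ in range(rounds):
            transfer(b, a, 2)

    threads = [threading.Thread(target=a_to_b), threading.Thread(target=b_to_a)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance
```

If each transfer instead locked its source first and its destination second, the two opposing workers could each hold one lock while waiting for the other, which is exactly the circular wait the ordering rule rules out.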

10. Explain the significance of IPC in collaborative software development with an example.

- Example: In a version control system, IPC allows different processes to communicate and coordinate changes to a shared codebase. This ensures collaborative development, where multiple developers can work on the same project simultaneously while maintaining data integrity.

FAQs on Common IPC Mechanisms in Operating Systems:

1. What is IPC, and why is it crucial in operating systems?

- Answer: IPC, or Inter-Process Communication, is essential for facilitating communication and data exchange between processes in an operating system. It allows processes to work collaboratively, share information, and synchronize their actions.

2. What are the primary reasons for using IPC mechanisms in an operating system?

- Answer: IPC mechanisms are employed to enable communication, coordination, and data sharing among processes. They play a vital role in multitasking, ensuring efficient resource utilization, and facilitating collaboration between different components of a system.

3. Which are the most common IPC mechanisms used in operating systems?

- Answer: The common IPC mechanisms include Shared Memory, Message Passing, Pipes, Sockets, and Signals. Each mechanism serves specific communication needs and is employed based on the requirements of the processes involved.

4. How does Shared Memory work as an IPC mechanism, and where is it commonly used?

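- Answer: Shared Memory allows processes to map a common region of memory into their address spaces. Data written by one process can be read by the others without copying it through the kernel, but the processes must coordinate access themselves, typically with semaphores or mutexes, to avoid reading partially updated data. It is commonly used in scenarios where fast and direct communication is required, such as in producer-consumer relationships.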
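
As a small sketch of two processes seeing the same region, the Python example below uses a file-backed memory mapping as a stand-in for a shared-memory segment (an assumption made to keep the example portable). The file name `region.bin` and the function name `share_via_mmap` are hypothetical.

```python
import mmap
import os
import subprocess
import sys
import tempfile

# Child process: maps the same file and writes into the shared region.
CHILD = (
    "import mmap, sys\n"
    "with open(sys.argv[1], 'r+b') as f:\n"
    "    with mmap.mmap(f.fileno(), 0) as region:\n"
    "        region[0:5] = b'hello'\n"
)

def share_via_mmap() -> bytes:
    """Map a region, let a child process write to it, and read the result."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "region.bin")
        with open(path, "wb") as f:
            f.write(b"\x00" * 16)          # reserve 16 bytes for the region
        with open(path, "r+b") as f:
            with mmap.mmap(f.fileno(), 0) as region:
                # Waiting for the child to exit is the only synchronization
                # here: the parent reads after the writer has finished.
                subprocess.run([sys.executable, "-c", CHILD, path], check=True)
                return bytes(region[0:5])
```

The parent never receives the bytes through a message; it simply finds them in its own mapping. Waiting for the child to finish plays the role that a semaphore or mutex would play in a longer-running program.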

5. Can you explain the concept of Message Passing in IPC and its applications?

- Answer: Message Passing involves processes exchanging data through messages. It is commonly used in scenarios where communication between processes is achieved by sending and receiving well-defined messages. Examples include communication in distributed systems and network protocols.

6. What role do Pipes play as an IPC mechanism, and in what scenarios are they beneficial?

- Answer: Pipes facilitate one-way communication between processes. They are beneficial when sequential data processing is required. For instance, pipes are commonly used in shell commands to pass output from one process as input to another.

7. How are Sockets utilized as an IPC mechanism, and where are they prominently applied?

- Answer: Sockets provide bidirectional communication between processes, either on the same machine (for example, through Unix domain sockets or the loopback interface) or over a network. They are widely used in client-server architectures and networked applications, where they enable communication between processes running on different machines.

8. What is the significance of Signals in IPC, and how are they employed in operating systems?

- Answer: Signals are software interrupts that notify processes about specific events. They are used for various purposes, such as process termination, handling exceptional conditions, or requesting attention. Signals provide a way for processes to communicate asynchronously.
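
A minimal sketch of signal-based notification in Python is shown below. It assumes a POSIX system (SIGUSR1 is not available on Windows) and must run in the main thread, since Python only allows handlers to be installed there. For simplicity the process signals itself; the function name `notify_self` is hypothetical.

```python
import os
import signal
import time

def notify_self(timeout: float = 1.0) -> list:
    """Install a handler, send SIGUSR1 to this process, report what arrived."""
    received = []

    def handler(signum, frame):
        # Runs asynchronously with respect to the main flow of the program.
        received.append(signal.Signals(signum).name)

    previous = signal.signal(signal.SIGUSR1, handler)
    try:
        os.kill(os.getpid(), signal.SIGUSR1)   # another process could do this
        deadline = time.monotonic() + timeout
        while not received and time.monotonic() < deadline:
            time.sleep(0.01)                   # give the handler time to run
    finally:
        # Restore whatever handler was installed before this sketch ran.
        restore = previous if previous is not None else signal.SIG_DFL
        signal.signal(signal.SIGUSR1, restore)
    return received
```

A signal carries almost no data beyond its number, which is why signals suit event notification rather than bulk data transfer.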

9. How do IPC mechanisms contribute to synchronization and coordination between processes?

- Answer: IPC mechanisms play a crucial role in synchronization by providing a means for processes to coordinate their actions. For example, synchronization primitives like semaphores and mutexes are often implemented using shared memory or message passing.

10. Which IPC mechanism is suitable for communication between unrelated processes on different machines?

- Answer: Sockets are commonly used for communication between unrelated processes running on different machines. They provide a network interface, allowing processes to communicate over a network, making them versatile for distributed systems and client-server applications.

People Also Ask: Inter-Process Communication in Distributed Systems:

1. What role does Inter-Process Communication (IPC) play in a Distributed System?

- Answer: IPC in a Distributed System enables processes running on different machines to communicate and share information. It facilitates coordination and data exchange in a networked environment.

2. How does IPC contribute to the scalability of Distributed Systems?

- Answer: IPC lets the processes of a Distributed System cooperate across machine boundaries, so work can be spread over more nodes as demand grows instead of being limited to a single machine. This communication between components supports the system’s growth and adaptability.

3. Which IPC mechanisms are commonly used in Distributed Systems, and why?

- Answer: Common IPC mechanisms in Distributed Systems include Message Passing, Remote Procedure Calls (RPC), and Sockets. These mechanisms ensure effective communication and coordination among geographically dispersed processes.

4. Can you explain the significance of IPC in ensuring fault tolerance in a Distributed System?

- Answer: IPC enhances fault tolerance by enabling processes in a Distributed System to exchange information about system health. This communication aids in making informed decisions and adapting to changes or failures.

5. How does IPC address the challenges of communication latency in a geographically distributed environment?

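- Answer: IPC cannot remove network latency, but it can reduce how much that latency affects the system. Techniques such as asynchronous message passing, which lets a sender continue working instead of waiting for a reply, and intelligent routing, which directs requests along shorter paths, contribute to minimizing delays in information exchange.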

People Also Ask: Inter-Process Communication and Synchronization:

1. What is the relationship between Inter-Process Communication (IPC) and process synchronization?

- Answer: IPC and process synchronization are closely linked concepts. IPC facilitates communication between processes, while synchronization ensures orderly execution and prevents conflicts, especially in shared resource scenarios.

2. How do IPC mechanisms contribute to achieving synchronization between concurrent processes?

- Answer: IPC mechanisms provide synchronization primitives like semaphores and mutexes, allowing processes to coordinate their actions. These synchronization tools help avoid race conditions and maintain the consistency of shared resources.

3. Can you provide examples of scenarios where IPC is crucial for synchronization in multitasking environments?

- Answer: In multitasking environments, IPC ensures synchronization in scenarios such as producer-consumer relationships, where processes need to coordinate the production and consumption of shared resources.
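
The producer-consumer coordination described here can be sketched with a bounded buffer. In this Python illustration, threads stand in for processes and the consumer simply doubles each item; both are assumptions of the sketch, and the name `produce_and_consume` is hypothetical.

```python
import queue
import threading

def produce_and_consume(items, capacity: int = 2) -> list:
    """Pass items through a bounded buffer and return the consumer's results."""
    buffer = queue.Queue(maxsize=capacity)
    done = object()          # sentinel telling the consumer to stop
    consumed = []

    def producer() -> None:
        for item in items:
            buffer.put(item)     # blocks while the buffer is full
        buffer.put(done)

    def consumer() -> None:
        while True:
            item = buffer.get()  # blocks while the buffer is empty
            if item is done:
                break
            consumed.append(item * 2)

    workers = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return consumed
```

The blocking `put` and `get` calls are what keep the two sides in step: the producer cannot run ahead of a full buffer, and the consumer cannot read from an empty one.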

4. Why is proper synchronization essential for preventing deadlock situations in concurrent systems using IPC?

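- Answer: Synchronization regulates access to shared resources, but careless synchronization can itself cause deadlock. A deadlock occurs when each process in a group is waiting for a resource held by another process in the same group, so none can proceed. Proper synchronization design, such as acquiring locks in a consistent order, helps avoid such scenarios.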

5. How does IPC contribute to achieving mutual exclusion, and why is it crucial in concurrent processing?

- Answer: IPC provides mechanisms like semaphores and locks, enabling processes to enforce mutual exclusion. This ensures that only one process accesses a critical section at a time, preventing interference and maintaining data integrity.

People Also Ask: Inter-Process Communication Types:

1. What are the main types of Inter-Process Communication (IPC), and how do they differ?

- Answer: The main types of IPC are Shared Memory, Message Passing, Sockets, Pipes, and Signals. They differ in how processes communicate, share data, and synchronize their actions.

2. When is Shared Memory preferred as an IPC mechanism, and what advantages does it offer?

- Answer: Shared Memory is preferred when processes need fast and direct communication. It allows multiple processes to access the same region of memory, offering high-performance data sharing.

3. How does Message Passing differ from Shared Memory as an IPC mechanism, and in what scenarios is it commonly used?

- Answer: Message Passing involves processes exchanging data through messages, providing a more structured form of communication than Shared Memory. It is commonly used in distributed systems and situations requiring well-defined message exchange.

4. Can you explain the role of Sockets as an IPC mechanism and where they find prominent applications?

- Answer: Sockets provide bidirectional communication between processes, either on the same machine or over a network. They are widely used in client-server architectures, networked applications, and scenarios where processes need to communicate across different machines.

5. How are Pipes utilized for IPC, and in what scenarios do they excel as a communication mechanism between processes?

- Answer: Pipes facilitate one-way communication between processes. They excel in scenarios where sequential data processing is required, such as connecting the output of one process as the input to another in a shell command.

People Also Ask: Inter-Process Communication in C++:

1. How does C++ support Inter-Process Communication, and what are the key features of IPC in C++?

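- Answer: The C++ standard library does not define IPC facilities of its own. C++ programs use IPC through operating system APIs (such as POSIX or Windows calls) and through libraries that wrap them. The mechanisms most often used this way are Shared Memory, Message Queues, and Sockets, which let C++ processes build robust communication channels.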

2. Which IPC mechanism is commonly used in C++ for communication between processes within the same system?

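- Answer: Shared Memory is commonly used in C++ for IPC when processes are running on the same system. It allows multiple C++ processes to share data efficiently because the data does not need to be copied between them, although access still has to be synchronized.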

3. Can C++ programs use Sockets for IPC, and what advantages does it bring for cross-platform communication?

- Answer: Yes, C++ programs can use Sockets for IPC. The native socket APIs differ somewhat between platforms, so cross-platform code usually relies on a wrapper library such as Boost.Asio. Sockets enable C++ applications to communicate on one machine or over networks, making them versatile for various scenarios.

4. How can C++ developers implement Message Passing for IPC, and what benefits does it offer in a multi-process environment?

- Answer: C++ developers can implement Message Passing for IPC using libraries or frameworks that support message-based communication. Message Passing is beneficial in multi-process environments for structured and reliable data exchange.

5. Are there any C++ libraries or tools that facilitate easy implementation of Inter-Process Communication?

- Answer: Yes, several C++ libraries and tools simplify IPC implementation. Examples include Boost.Interprocess, ZeroMQ, and Poco C++ Libraries, providing C++ developers with convenient solutions for different IPC scenarios.